Overview

Rancher is a container management platform built for organizations that deploy containers in production. Rancher makes it easy to run Kubernetes everywhere, meet IT requirements, and empower DevOps teams.

Run Kubernetes Everywhere

Kubernetes has become the container orchestration standard. Most cloud and virtualization vendors now offer it as standard infrastructure. Rancher users have the choice of creating Kubernetes clusters with Rancher Kubernetes Engine (RKE) or cloud Kubernetes services, such as GKE, AKS, and EKS. Rancher users can also import and manage their existing Kubernetes clusters created using any Kubernetes distribution or installer.

Meet IT Requirements

Rancher supports centralized authentication, access control, and monitoring for all Kubernetes clusters under its control. For example, you can:

- Use your Active Directory credentials to access Kubernetes clusters hosted by cloud vendors, such as GKE.

- Set up and enforce access control and security policies across all users, groups, projects, clusters, and clouds.

- View the health and capacity of your Kubernetes clusters from a single pane of glass.

Empower DevOps Teams

Rancher provides an intuitive user interface for DevOps engineers to manage their application workloads. Users do not need in-depth knowledge of Kubernetes concepts to start using Rancher. The Rancher catalog contains a set of useful DevOps tools. Rancher is certified with a wide selection of cloud native ecosystem products, including security tools, monitoring systems, container registries, and storage and networking drivers.

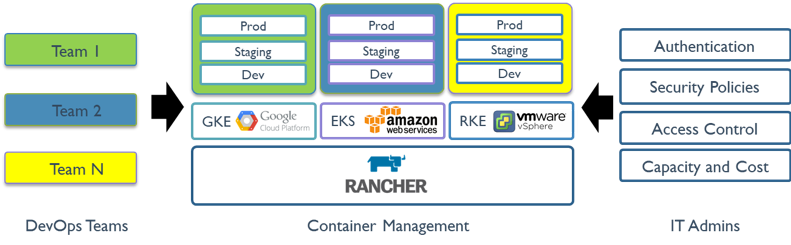

The figure above illustrates the role Rancher plays in IT and DevOps organizations. Each team deploys its applications on the public or private clouds it chooses. IT administrators gain visibility and enforce policies across all users, clusters, and clouds.

Features of the Rancher API Server

The Rancher API server is built on top of an embedded Kubernetes API server and an etcd database. It implements the following functionality:

Authorization and Role-Based Access Control

- User management: The Rancher API server manages user identities that correspond to external authentication providers like Active Directory or GitHub, in addition to local users.

- Authorization: The Rancher API server manages access control and security standards.
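Because the Rancher API server is where authentication and access control are applied, a client has to present credentials with every request. The following is a minimal sketch of that pattern, not a reference client. It assumes an API key is sent as a bearer token, that a cluster collection is served at `/v3/clusters`, and that the response is JSON with a top-level `data` list. The host name and token value are hypothetical; check the endpoint and response shape against the API reference for your Rancher version.

```python
import json
import urllib.request


def build_cluster_list_request(rancher_url, api_token):
    """Build (but do not send) a GET request for the clusters Rancher manages."""
    # Assumption: the cluster collection lives at /v3/clusters.
    url = rancher_url.rstrip("/") + "/v3/clusters"
    return urllib.request.Request(
        url,
        headers={
            # Assumption: an API key is accepted as a bearer token.
            "Authorization": "Bearer " + api_token,
            "Accept": "application/json",
        },
        method="GET",
    )


def cluster_names(response_body):
    """Extract cluster names from a collection response.

    Assumption: the body is JSON with a top-level "data" list whose
    items each carry a "name" field.
    """
    payload = json.loads(response_body)
    return [item["name"] for item in payload.get("data", [])]


if __name__ == "__main__":
    # Hypothetical server URL and token, for illustration only.
    request = build_cluster_list_request(
        "https://rancher.example.com", "token-xxxxx:secret"
    )
    print(request.get_method(), request.full_url)
    # To actually send it: urllib.request.urlopen(request).read()
```

Whatever the request asks for, the server only returns or changes what the roles of the token's owner allow, so the same call can yield different results for different users.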

Working with Kubernetes

- Provisioning Kubernetes clusters: The Rancher API server can provision Kubernetes on existing nodes, or perform Kubernetes upgrades.

- Catalog management: Rancher provides the ability to use a catalog of Helm charts that make it easy to repeatedly deploy applications.

- Managing projects: A project is a group of multiple namespaces and access control policies within a cluster. Projects are a Rancher concept, not a Kubernetes one; they let you manage multiple namespaces as a group and perform Kubernetes operations in them. The Rancher UI provides features for project administration and for managing applications within projects.

- Fleet Continuous Delivery: Within Rancher, you can leverage Fleet Continuous Delivery to deploy applications from Git repositories, without any manual operation, to targeted downstream Kubernetes clusters.

- Istio: Our integration with Istio is designed so that a Rancher operator, such as an administrator or cluster owner, can deliver Istio to developers. Developers can then use Istio to enforce security policies, troubleshoot problems, or manage traffic for blue/green deployments, canary deployments, or A/B testing.
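To make the project concept from the list above more concrete, here is a minimal sketch of how namespaces can be grouped by the project they belong to. It assumes that Rancher records project membership on each namespace through a `field.cattle.io/projectId` annotation with a value of the form `<cluster-id>:<project-id>`; verify this against your Rancher version. The namespace objects and IDs are hypothetical and only mimic the shape of Kubernetes namespace metadata.

```python
from collections import defaultdict

# Assumption: project membership is stored on the namespace under this
# annotation, valued "<cluster-id>:<project-id>".
PROJECT_ANNOTATION = "field.cattle.io/projectId"


def group_namespaces_by_project(namespaces):
    """Map each project ID to the sorted names of its namespaces.

    Namespaces without the annotation are collected under the key None.
    """
    projects = defaultdict(list)
    for ns in namespaces:
        metadata = ns.get("metadata", {})
        annotations = metadata.get("annotations") or {}
        projects[annotations.get(PROJECT_ANNOTATION)].append(metadata["name"])
    return {project: sorted(names) for project, names in projects.items()}


# Hypothetical namespace objects, shaped like items in a namespace listing.
example_namespaces = [
    {"metadata": {"name": "shop-frontend",
                  "annotations": {PROJECT_ANNOTATION: "c-abc12:p-web"}}},
    {"metadata": {"name": "shop-backend",
                  "annotations": {PROJECT_ANNOTATION: "c-abc12:p-web"}}},
    {"metadata": {"name": "batch-jobs",
                  "annotations": {PROJECT_ANNOTATION: "c-abc12:p-data"}}},
    {"metadata": {"name": "scratch"}},  # not assigned to any project
]

if __name__ == "__main__":
    grouped = group_namespaces_by_project(example_namespaces)
    for project, names in grouped.items():
        print(project, names)
```

Kubernetes itself only knows about the individual namespaces. The grouping exists in Rancher, which is why access control policies attached to a project can apply to all of its namespaces at once.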

Working with Cloud Infrastructure

- Tracking nodes: The Rancher API server tracks identities of all the nodes in all clusters.

- Setting up infrastructure: When configured to use a cloud provider, Rancher can dynamically provision new nodes and persistent storage in the cloud.

Cluster Visibility

- Logging: Rancher can integrate with a variety of popular logging services and tools that exist outside of your Kubernetes clusters.

- Monitoring: Using Rancher, you can monitor the state and processes of your cluster nodes, Kubernetes components, and software deployments through integration with Prometheus, a leading open-source monitoring solution.

- Alerting: To keep your clusters and applications healthy, you need to stay informed of the planned and unplanned events occurring in your clusters and projects.

Editing Downstream Clusters with Rancher

The options and settings available for an existing cluster change based on the method that you used to provision it. For example, only clusters provisioned by RKE have Cluster Options available for editing.

After a cluster is created with Rancher, a cluster administrator can manage cluster membership or manage node pools, among other options.

The following table summarizes the options and settings available for each cluster type:

| Action | Rancher Launched Kubernetes Clusters | EKS, GKE and AKS Clusters<sup>1</sup> | Other Hosted Kubernetes Clusters | Non-EKS or GKE Registered Clusters |
|---|---|---|---|---|
| Using kubectl and a kubeconfig file to Access a Cluster | ✓ | ✓ | ✓ | ✓ |
| Managing Cluster Members | ✓ | ✓ | ✓ | ✓ |
| Editing and Upgrading Clusters | ✓ | ✓ | ✓ | ✓<sup>2</sup> |
| Managing Nodes | ✓ | ✓ | ✓ | ✓<sup>3</sup> |
| Managing Persistent Volumes and Storage Classes | ✓ | ✓ | ✓ | ✓ |
| Managing Projects, Namespaces and Workloads | ✓ | ✓ | ✓ | ✓ |
| Using App Catalogs | ✓ | ✓ | ✓ | ✓ |
| Configuring Tools (Alerts, Notifiers, Monitoring, Logging, Istio) | ✓ | ✓ | ✓ | ✓ |
| Running Security Scans | ✓ | ✓ | ✓ | ✓ |
| Ability to rotate certificates | ✓ | ✓ | | |
| Ability to back up and restore Rancher-launched clusters | ✓ | ✓ | ✓<sup>4</sup> | |
| Cleaning Kubernetes components when clusters are no longer reachable from Rancher | ✓ | | | |

1. Registered EKS, GKE and AKS clusters have the same options available as EKS, GKE and AKS clusters created from the Rancher UI. The difference is that when a registered cluster is deleted from the Rancher UI, it is not destroyed.

2. Cluster configuration options can't be edited for registered clusters, except for K3s and RKE2 clusters.

3. For registered cluster nodes, the Rancher UI exposes the ability to cordon, drain, and edit the node.

4. For registered clusters using etcd as the control plane datastore, snapshots must be taken manually outside of the Rancher UI to use for backup and recovery.