5. Schedule Your Services

In v1.6, objects called services were used to schedule containers to your cluster hosts. Services included the Docker image for an application, along with configuration settings for a desired state.

In Rancher v2.x, the equivalent object is known as a workload. Rancher v2.x retains all scheduling functionality from v1.6, but because the default container orchestrator changed from Cattle to Kubernetes, the terminology and mechanisms for scheduling workloads have changed.

Workload deployment is one of the more important and complex aspects of container orchestration. Deploying pods to available shared cluster resources helps maximize performance while making efficient use of compute resources.

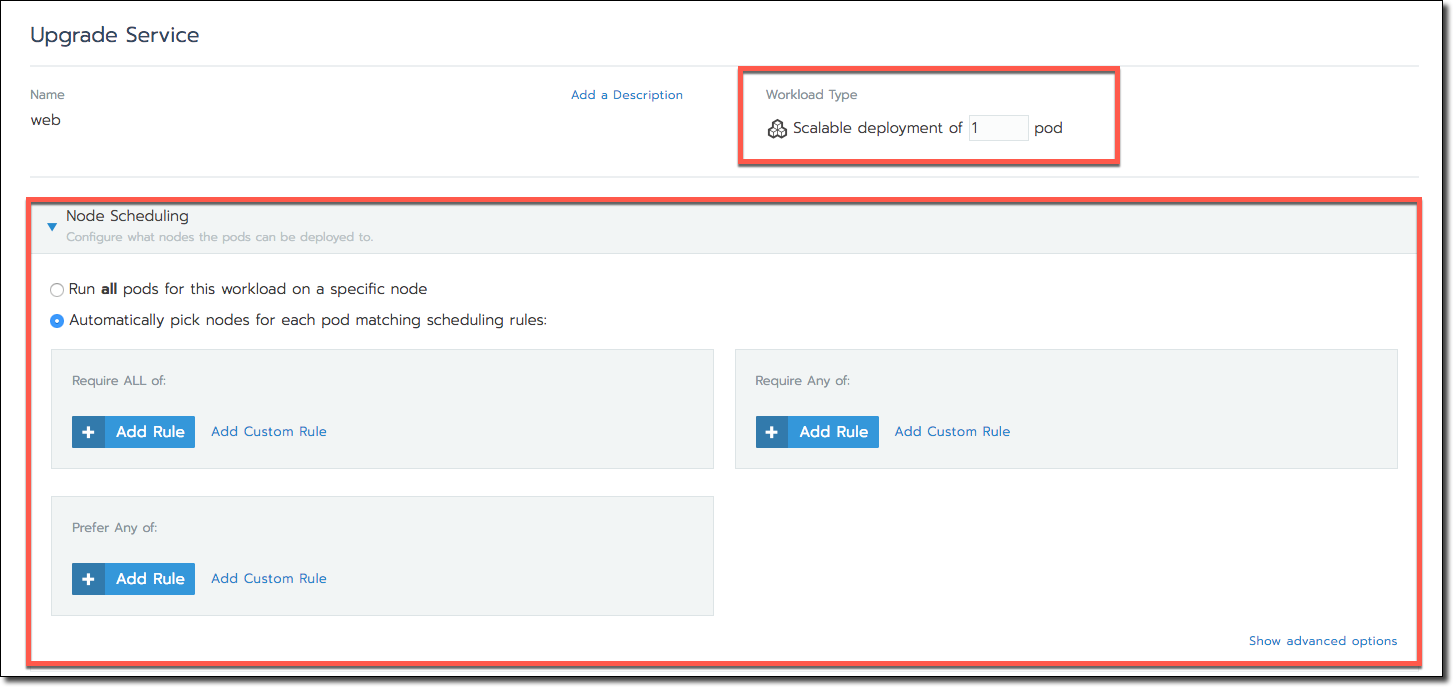

You can schedule your migrated v1.6 services while editing a deployment. Schedule services by using the Workload Type and Node Scheduling sections, which are shown above.

What's Different for Scheduling Services?

Rancher v2.x retains all methods available in v1.6 for scheduling your services. However, because the default container orchestration system has changed from Cattle to Kubernetes, the terminology and implementation for each scheduling option has changed.

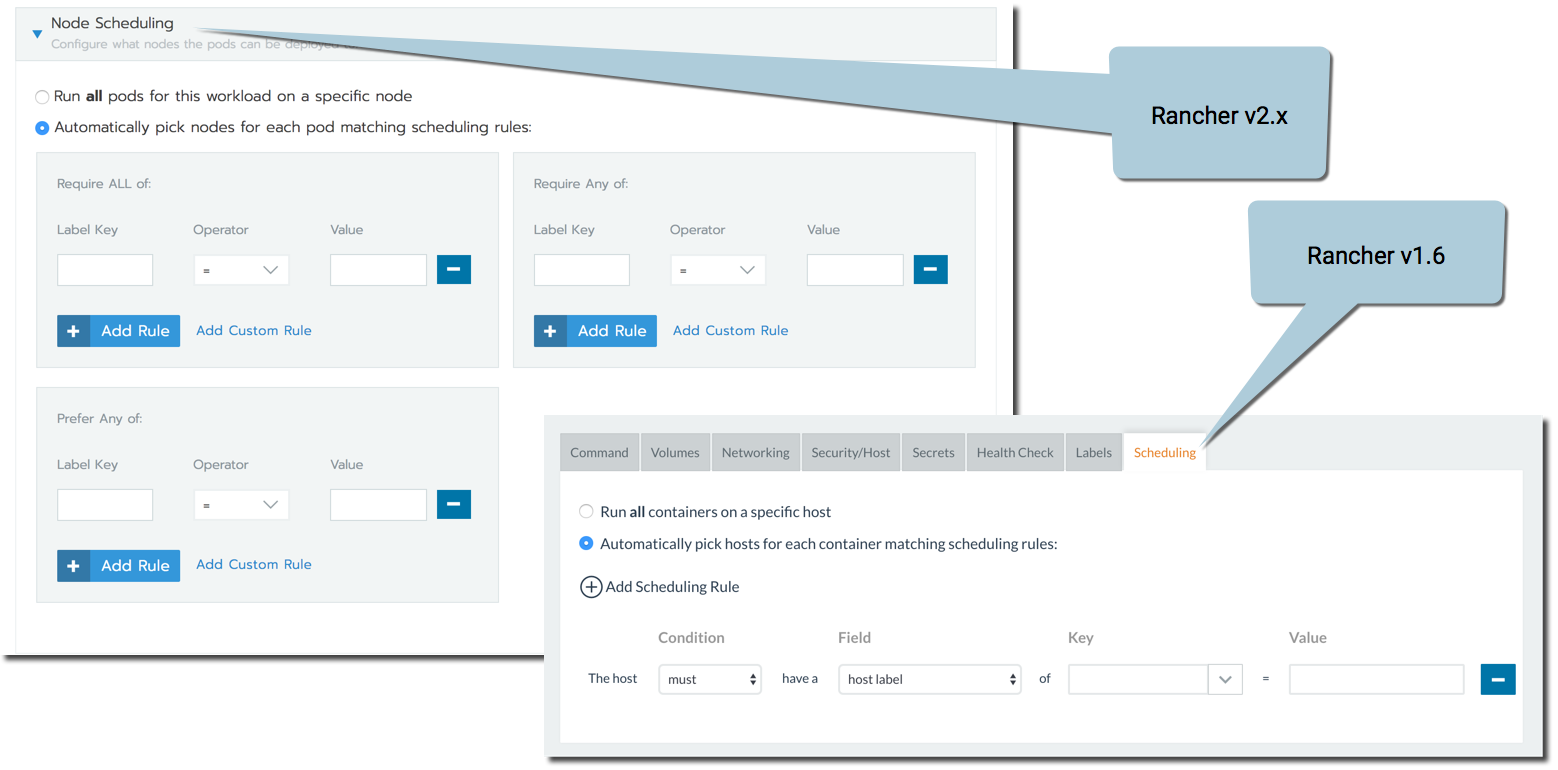

In v1.6, you would schedule a service to a host while adding a service to a Stack. In Rancher v2.x, the equivalent action is to schedule a workload for deployment. The composite image above compares the UI used for scheduling in Rancher v2.x and v1.6.

Node Scheduling Options

Rancher offers a variety of options when scheduling nodes to host workload pods (i.e., scheduling hosts for containers in Rancher v1.6).

You can choose a scheduling option as you deploy a workload. (Deploying a workload is synonymous with adding a service to a Stack in Rancher v1.6.) You can deploy a workload by using the context menu to browse to a cluster project (<CLUSTER> > <PROJECT> > Workloads).

The sections that follow provide information on using each scheduling option, as well as any notable changes from Rancher v1.6. For full instructions on deploying a workload in Rancher v2.x beyond just scheduling options, see Deploying Workloads.

Scheduling a Certain Number of Pods

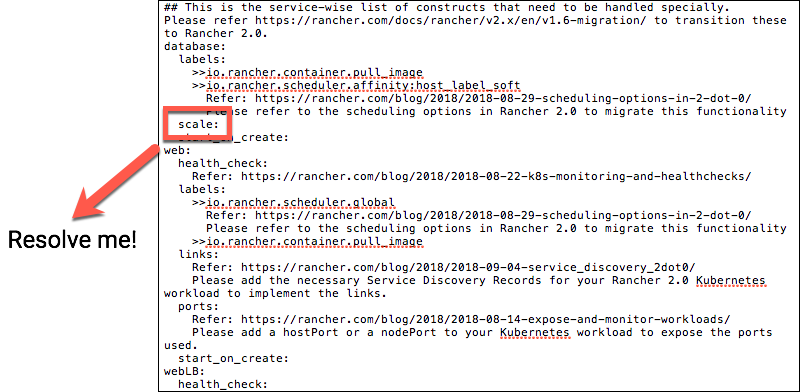

In v1.6, you could control the number of container replicas deployed for a service. You can schedule pods the same way in v2.x, but you'll have to set the scale manually while editing a workload.

During migration, you can resolve scale entries in output.txt by setting a value for the Scalable deployment option under Workload Type, depicted above.
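As a rough sketch, the Scalable deployment option corresponds to the replicas field of a Kubernetes Deployment. The Python below builds such a manifest as a plain dictionary and prints it as JSON (kubectl apply -f accepts JSON as well as YAML). The workload name, the app label, and the image tag are illustrative assumptions, not values produced by migration-tools.

```python
import json


def scalable_deployment(name: str, image: str, replicas: int) -> dict:
    """Build a minimal apps/v1 Deployment that runs `replicas` copies of one container."""
    labels = {"app": name}  # assumed label tying the Deployment to its pods
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,  # plays the role of the v1.6 service scale
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


manifest = scalable_deployment("nginx", "nginx:1.25", replicas=2)
print(json.dumps(manifest, indent=2))
```

The selector and the pod template labels must agree, which is why the sketch derives both from the same dictionary.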

Scheduling Pods to a Specific Node

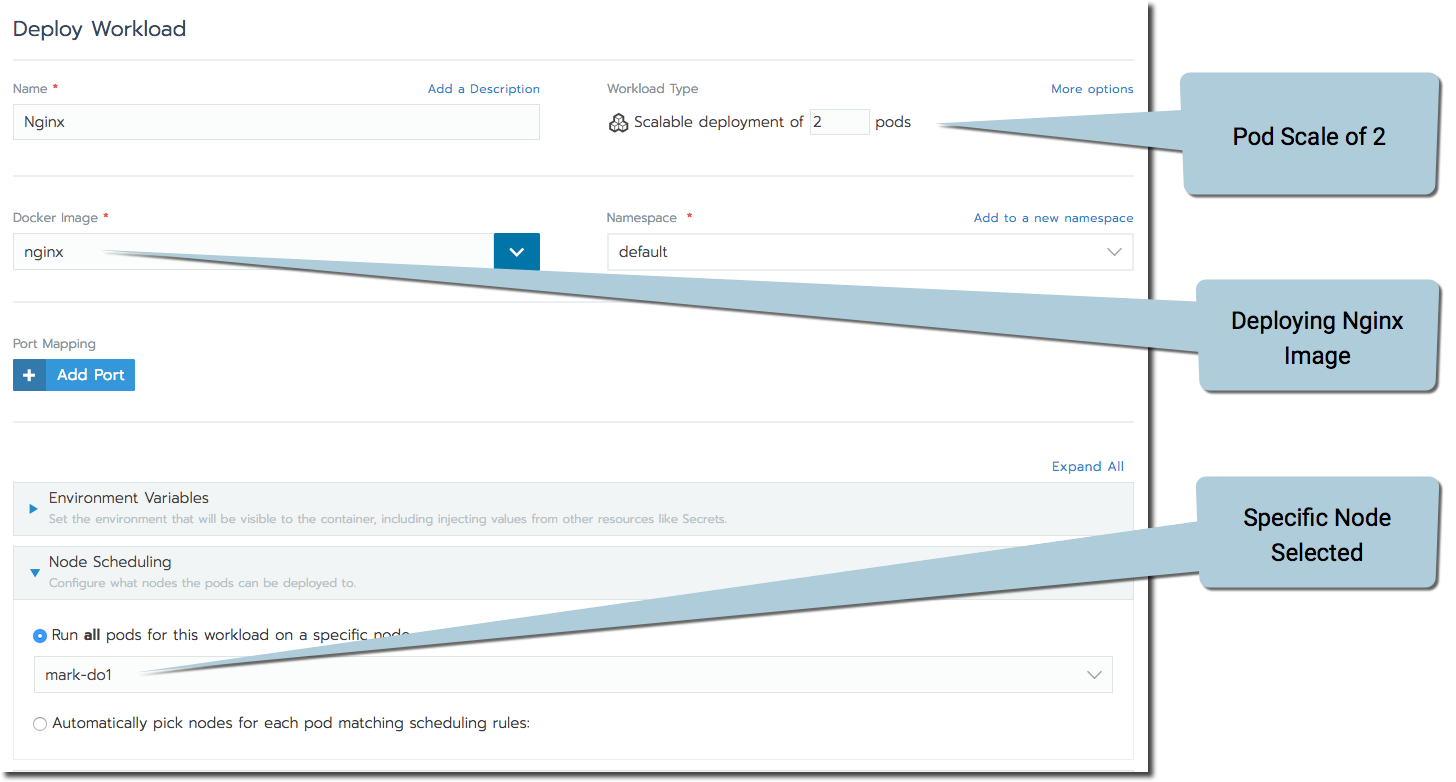

Just as you could schedule containers to a single host in Rancher v1.6, you can schedule pods to a single node in Rancher v2.x.

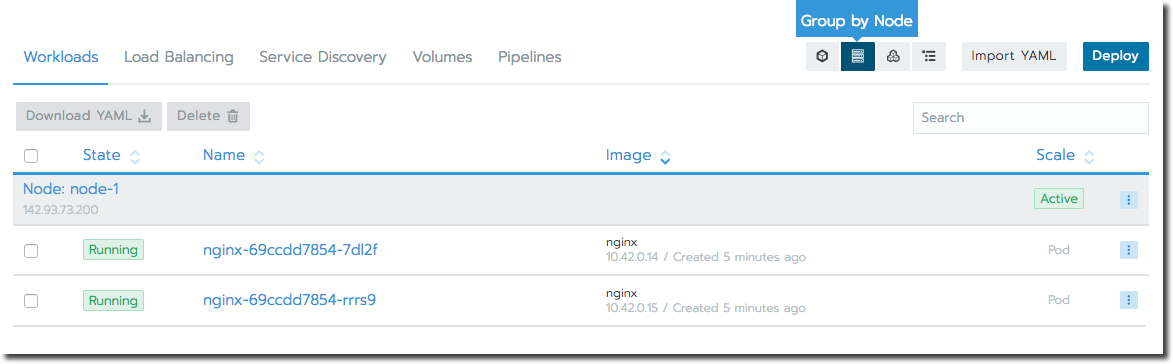

As you deploy a workload, use the Node Scheduling section to choose a node to run your pods on. The workload shown above is being scheduled to deploy an Nginx image with a scale of two pods on a specific node.

Rancher schedules pods to the node you select if 1) compute resources are available on the node and 2) if you've configured port mapping to use the HostPort option, there are no port conflicts.
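The two conditions can be illustrated with a deliberately simplified Python model. This is not the Kubernetes scheduler's code: the dictionaries, the units (CPU in millicores, memory in MiB), and the function name can_place are hypothetical, the port check ignores hostIP, and the real scheduler weighs many more factors. The sketch checks that the node has enough unrequested CPU and memory for the new pod, and that none of the pod's host ports (per protocol) is already taken on that node.

```python
def can_place(node: dict, existing_pods: list, pod: dict) -> bool:
    """Simplified check: enough free CPU/memory and no hostPort conflicts on the node."""
    # 1) Compute resources: add up what pods already on the node have requested.
    used_cpu = sum(p["cpu_m"] for p in existing_pods)
    used_mem = sum(p["mem_mi"] for p in existing_pods)
    if used_cpu + pod["cpu_m"] > node["cpu_m"] or used_mem + pod["mem_mi"] > node["mem_mi"]:
        return False
    # 2) HostPort: a (port, protocol) pair can be bound by only one pod per node.
    taken = {hp for p in existing_pods for hp in p.get("host_ports", [])}
    return not any(hp in taken for hp in pod.get("host_ports", []))


node = {"cpu_m": 2000, "mem_mi": 4096}
first = {"cpu_m": 250, "mem_mi": 128, "host_ports": [(8080, "TCP")]}
second = dict(first)  # a second replica asking for the same host port

print(can_place(node, [], first))        # True
print(can_place(node, [first], second))  # False: 8080/TCP is already bound
```

Under this model, two replicas pinned to the same node cannot both bind the same HostPort, so the second one would not be placed.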

If you expose the workload using a NodePort that conflicts with another workload, the deployment is still created successfully, but no NodePort service is created. Therefore, the workload isn't exposed outside of the cluster.
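For reference, a NodePort service is a Service object of type NodePort that selects the workload's pods by label. In the sketch below, the service name, the app label, and the port numbers are assumptions. A nodePort must fall within the cluster's node port range (30000-32767 by default, though a cluster can be configured differently), and the API server rejects a second Service that asks for a nodePort already in use, which is the kind of conflict described above.

```python
import json

# Kubernetes default node port range, 30000-32767 inclusive (clusters may change it).
DEFAULT_NODE_PORT_RANGE = range(30000, 32768)


def nodeport_service(name: str, app_label: str, port: int, node_port: int) -> dict:
    """Build a Service of type NodePort that exposes pods labeled app=<app_label>."""
    if node_port not in DEFAULT_NODE_PORT_RANGE:
        raise ValueError(f"nodePort {node_port} is outside the default range")
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "NodePort",
            "selector": {"app": app_label},  # must match the workload's pod labels
            "ports": [{"port": port, "targetPort": port, "nodePort": node_port, "protocol": "TCP"}],
        },
    }


svc = nodeport_service("nginx-nodeport", "nginx", port=80, node_port=30080)
print(json.dumps(svc, indent=2))
```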

After the workload is created, you can confirm that the pods are scheduled to your chosen node. From the project view, click Resources > Workloads. (In versions before v2.3.0, click the Workloads tab.) Click the Group by Node icon to sort your workloads by node. Note that both Nginx pods are scheduled to the same node.

Scheduling Using Labels

In Rancher v2.x, you can constrain pods for scheduling to specific nodes (referred to as hosts in v1.6). Using labels, which are key/value pairs that you can attach to different Kubernetes objects, you can configure your workload so that pods you've labeled are assigned to specific nodes (or nodes with specific labels are automatically assigned workload pods).

| Label Object | Rancher v1.6 | Rancher v2.x |
|---|---|---|
| Schedule by Node? | ✓ | ✓ |
| Schedule by Pod? | ✓ | ✓ |

Applying Labels to Nodes and Pods

Before you can schedule pods based on labels, you must first apply labels to your pods or nodes.

Hooray! All the labels that you manually applied in Rancher v1.6 (but not the ones automatically created by Rancher) are parsed by the migration-tools CLI, meaning you don't have to reapply them manually.

To apply labels to pods, make additions to the Labels and Annotations section as you configure your workload. After you complete workload configuration, you can view the label by viewing each pod that you've scheduled. To apply labels to nodes, edit your node and make additions to the Labels section.
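Under the hood, pod labels live in the workload's pod template (spec.template.metadata.labels), and the simplest way to tie pods to labeled nodes is a nodeSelector, which only matches nodes that carry every listed key/value pair. The Python sketch below models that rule; the label keys app and disktype and the node names are illustrative assumptions. Outside the UI, a node label like this is typically added with kubectl label nodes <node-name> disktype=ssd.

```python
def selector_matches(node_labels: dict, node_selector: dict) -> bool:
    """A nodeSelector matches only if the node has every key with exactly that value."""
    return all(node_labels.get(k) == v for k, v in node_selector.items())


# Pod template fragment: the pod carries its own labels and asks for SSD-backed nodes.
pod_template = {
    "metadata": {"labels": {"app": "web"}},
    "spec": {
        "nodeSelector": {"disktype": "ssd"},
        "containers": [{"name": "web", "image": "nginx"}],
    },
}

nodes = {
    "node-1": {"kubernetes.io/hostname": "node-1", "disktype": "ssd"},
    "node-2": {"kubernetes.io/hostname": "node-2", "disktype": "hdd"},
}

eligible = [name for name, labels in nodes.items()
            if selector_matches(labels, pod_template["spec"]["nodeSelector"])]
print(eligible)  # ['node-1']
```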

Label Affinity/AntiAffinity

Some of the most-used scheduling features in v1.6 were affinity and anti-affinity rules.

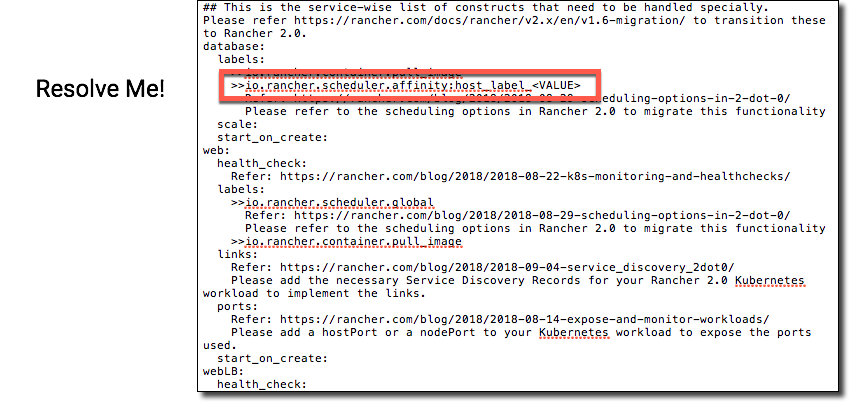

output.txt Affinity Label

Affinity

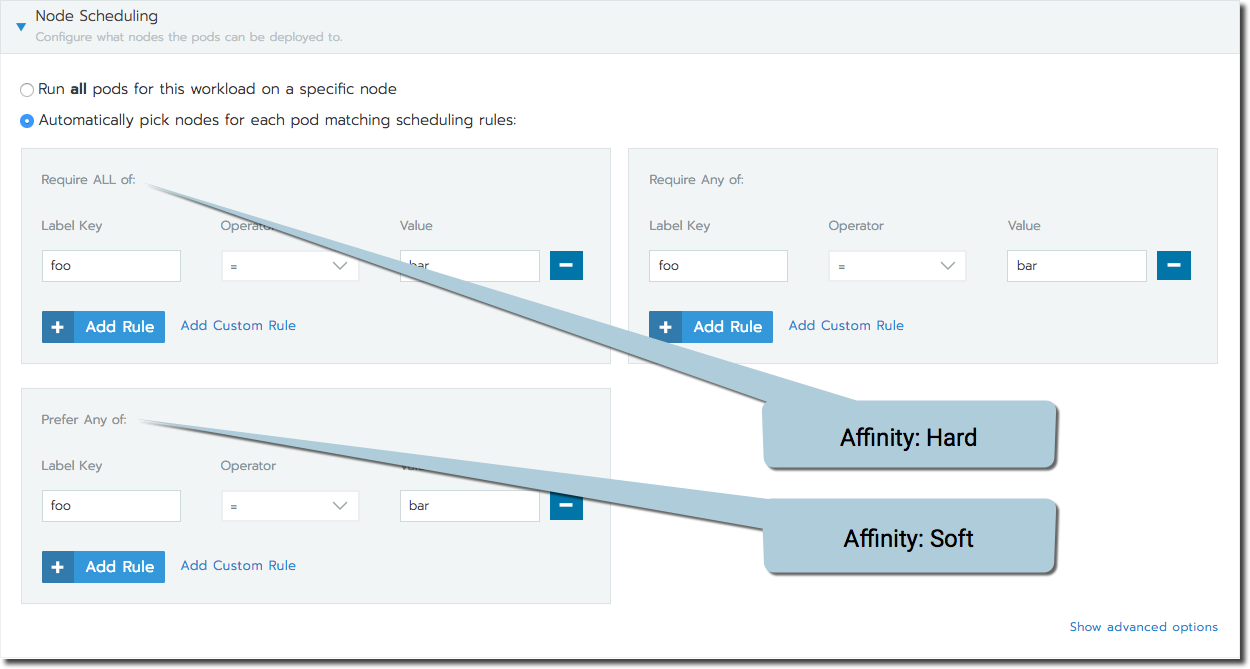

Any pods that share the same label are scheduled to the same node. Affinity can be configured in one of two ways:

| Affinity | Description |
|---|---|
| Hard | A hard affinity rule means that the host chosen must satisfy all the scheduling rules. If no such host can be found, the workload will fail to deploy. In the Kubernetes manifest, this rule translates to the `nodeAffinity` directive. To use hard affinity, configure a rule using the Require ALL of section (see figure). |
| Soft | Rancher v1.6 users are likely familiar with soft affinity rules, which try to schedule the deployment per the rule, but can deploy even if the rule is not satisfied by any host. To use soft affinity, configure a rule using the Prefer Any of section (see figure). |
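In a Kubernetes manifest, hard and soft node affinity are expressed as requiredDuringSchedulingIgnoredDuringExecution and preferredDuringSchedulingIgnoredDuringExecution; we assume the Require ALL of and Prefer Any of sections map to these two fields. The Python sketch below builds such a fragment and models how one match expression is evaluated against a node's labels for the In, NotIn, Exists, and DoesNotExist operators (Gt and Lt are left out). The label keys, values, and the weight of 50 are assumptions.

```python
def expression_matches(labels: dict, expr: dict) -> bool:
    """Evaluate one node selector requirement (In, NotIn, Exists, DoesNotExist)."""
    key, op, values = expr["key"], expr["operator"], expr.get("values", [])
    if op == "In":
        return key in labels and labels[key] in values
    if op == "NotIn":
        return key not in labels or labels[key] not in values  # an absent key also matches
    if op == "Exists":
        return key in labels
    if op == "DoesNotExist":
        return key not in labels
    raise ValueError(f"operator {op!r} is not modeled in this sketch")


def term_matches(labels: dict, term: dict) -> bool:
    """All expressions inside one term must match (logical AND)."""
    return all(expression_matches(labels, e) for e in term["matchExpressions"])


node_affinity = {
    # Hard rule: no matching node means the pod is not scheduled (it stays Pending).
    "requiredDuringSchedulingIgnoredDuringExecution": {
        "nodeSelectorTerms": [
            {"matchExpressions": [{"key": "zone", "operator": "In", "values": ["east"]}]}
        ]
    },
    # Soft rule: matching nodes are favored, but the pod may still run elsewhere.
    "preferredDuringSchedulingIgnoredDuringExecution": [
        {"weight": 50, "preference": {"matchExpressions": [{"key": "disktype", "operator": "Exists"}]}}
    ],
}

nodes = {"node-1": {"zone": "east"}, "node-2": {"zone": "west", "disktype": "ssd"}}
terms = node_affinity["requiredDuringSchedulingIgnoredDuringExecution"]["nodeSelectorTerms"]
# Terms are ORed: a node qualifies if any one term matches.
eligible = [n for n, lbl in nodes.items() if any(term_matches(lbl, t) for t in terms)]
print(eligible)  # ['node-1']
```

Here node-2 satisfies the soft preference but not the hard requirement, so it is excluded; a soft rule only ranks nodes that already pass every hard rule.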

AntiAffinity

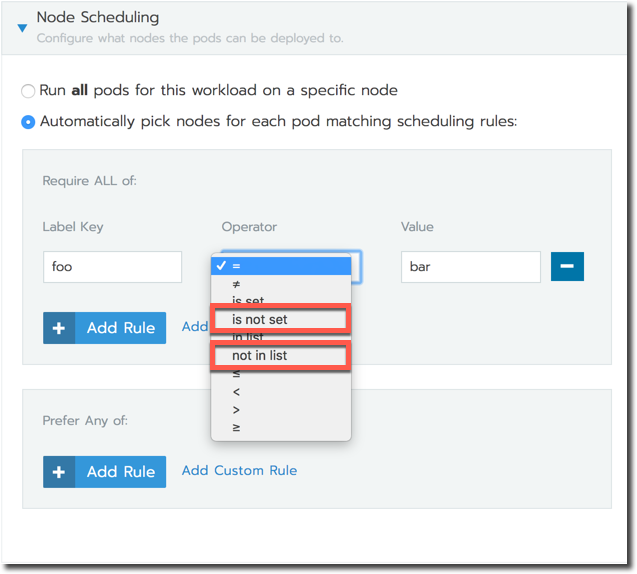

Any pods that share the same label are scheduled to different nodes. In other words, while affinity attracts pods with a specific label to one another, anti-affinity repels them from each other, so that the pods are scheduled to different nodes.

You can create an anti-affinity rule using either hard or soft affinity. However, when creating your rule, you must use either the `is not set` or `not in list` operator.

For anti-affinity rules, we recommend using operators like `NotIn` and `DoesNotExist`, as these terms are more intuitive when users are applying anti-affinity rules.

AntiAffinity Operators

Detailed documentation for affinity/anti-affinity is available in the Kubernetes Documentation.

Affinity rules that you create in the UI update your workload, adding pod affinity/anti-affinity directives to the workload's Kubernetes manifest spec.
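As a sketch of what such directives can look like, the Python below builds two affinity fragments: a node affinity term using NotIn and DoesNotExist (the operators recommended above), and a podAntiAffinity rule that keeps pods labeled app=web on different nodes by using kubernetes.io/hostname as the topology key. The label keys and values are assumptions, and the exact fields Rancher writes for a given UI rule may differ.

```python
import json

# Keep pods off nodes labeled dedicated=db and off nodes that have any "maintenance" label.
avoid_nodes = {
    "nodeAffinity": {
        "requiredDuringSchedulingIgnoredDuringExecution": {
            "nodeSelectorTerms": [{
                "matchExpressions": [
                    {"key": "dedicated", "operator": "NotIn", "values": ["db"]},
                    {"key": "maintenance", "operator": "DoesNotExist"},
                ]
            }]
        }
    }
}

# Spread replicas: never place two pods labeled app=web on the same node.
spread_replicas = {
    "podAntiAffinity": {
        "requiredDuringSchedulingIgnoredDuringExecution": [{
            "labelSelector": {"matchLabels": {"app": "web"}},
            "topologyKey": "kubernetes.io/hostname",  # "same node" means same hostname label
        }]
    }
}

# Both fragments sit side by side under spec.template.spec.affinity of the workload.
affinity = {**avoid_nodes, **spread_replicas}
print(json.dumps({"affinity": affinity}, indent=2))
```

Using preferredDuringSchedulingIgnoredDuringExecution instead (with a weight and a podAffinityTerm) would turn the spread rule into a soft one.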

Preventing Specific Services from Being Scheduled to Specific Nodes

In Rancher v1.6 setups, you could prevent services from being scheduled to specific nodes with the use of labels. In Rancher v2.x, you can reproduce this behavior using native Kubernetes scheduling options.

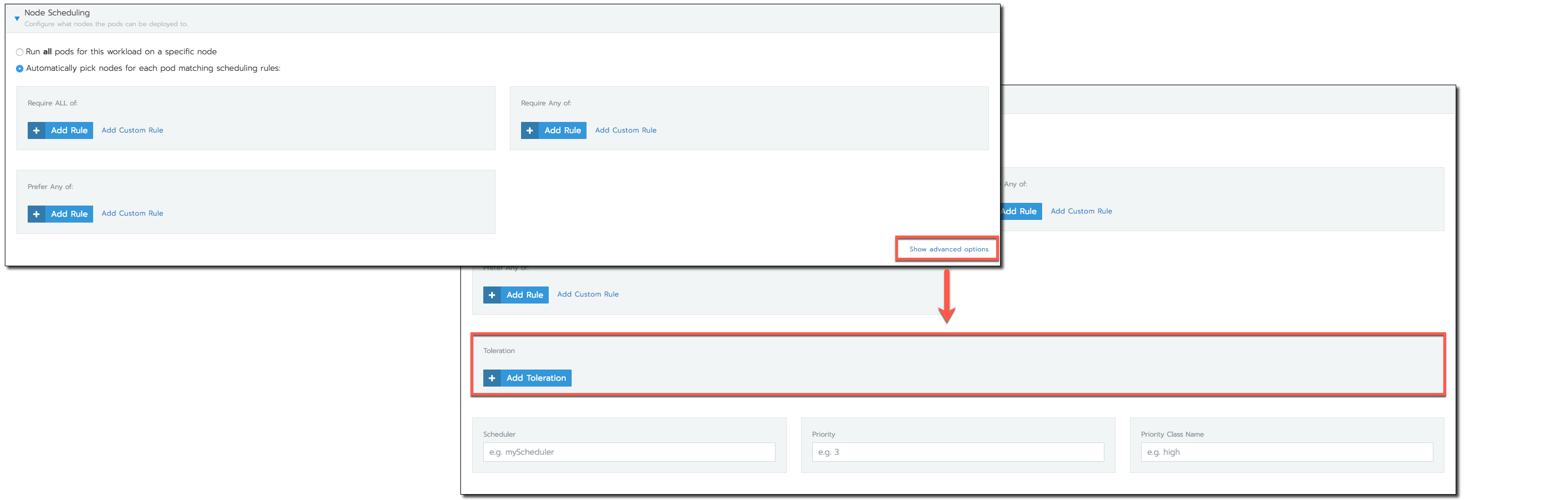

In Rancher v2.x, you can prevent pods from being scheduled to specific nodes by applying taints to a node. Pods will not be scheduled to a tainted node unless the pod has special permission, called a toleration. A toleration is a special setting on a pod, similar to a label, that allows the pod to be deployed to a tainted node. While editing a workload, you can apply tolerations using the Node Scheduling section. Click Show advanced options.

For more information, see the Kubernetes documentation on taints and tolerations.
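To make the taint/toleration relationship concrete, the Python sketch below models the matching rule described in the Kubernetes documentation, in simplified form: a toleration matches a taint when the keys match (an empty key with Exists matches every key), the effects match (an empty effect matches every effect), and, for the Equal operator, the values match. A pod can be placed on a node only if each NoSchedule or NoExecute taint on it is tolerated. The taint dedicated=gpu:NoSchedule is an assumed example; on a real cluster it would typically be applied with kubectl taint nodes <node-name> dedicated=gpu:NoSchedule.

```python
def tolerates(toleration: dict, taint: dict) -> bool:
    """Return True if one toleration matches one taint (simplified Kubernetes rules)."""
    # An empty effect in the toleration matches every taint effect.
    if toleration.get("effect") and toleration["effect"] != taint["effect"]:
        return False
    op = toleration.get("operator", "Equal")  # Kubernetes defaults the operator to Equal
    key = toleration.get("key", "")
    if op == "Exists":
        # An empty key with Exists tolerates every taint; otherwise the key must match.
        return key == "" or key == taint["key"]
    return key == taint["key"] and toleration.get("value", "") == taint.get("value", "")


def schedulable(node_taints: list, pod_tolerations: list) -> bool:
    """NoSchedule/NoExecute taints must each be tolerated; PreferNoSchedule is only a preference."""
    hard = [t for t in node_taints if t["effect"] in ("NoSchedule", "NoExecute")]
    return all(any(tolerates(tol, t) for tol in pod_tolerations) for t in hard)


gpu_taint = {"key": "dedicated", "value": "gpu", "effect": "NoSchedule"}
# Toleration as it would appear under spec.template.spec.tolerations of a workload.
gpu_toleration = {"key": "dedicated", "operator": "Equal", "value": "gpu", "effect": "NoSchedule"}

print(schedulable([gpu_taint], []))                # False: the taint is not tolerated
print(schedulable([gpu_taint], [gpu_toleration]))  # True
```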

Scheduling Global Services

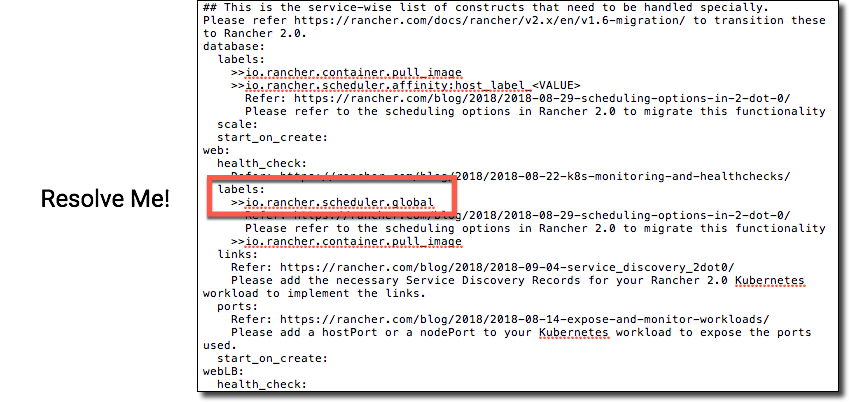

Rancher v1.6 included the ability to deploy global services, which are services that deploy duplicate containers to each host in the environment (i.e., nodes in your cluster using Rancher v2.x terms). If a service has the io.rancher.scheduler.global: 'true' label declared, then Rancher v1.6 schedules a service container on each host in the environment.

output.txt Global Service Label

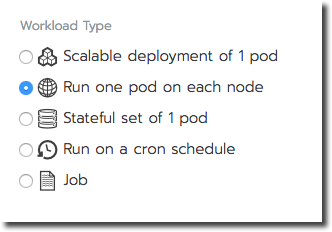

In Rancher v2.x, you can schedule a pod to each node using a Kubernetes DaemonSet, which is a specific type of workload. A DaemonSet functions exactly like a Rancher v1.6 global service. The Kubernetes scheduler deploys a pod on each node of the cluster, and as new nodes are added, the scheduler will start new pods on them provided they match the scheduling requirements of the workload. Additionally, in v2.x, you can also limit a DaemonSet to be deployed to nodes that have a specific label.

To create a DaemonSet while configuring a workload, choose Run one pod on each node from the Workload Type options.
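The sketch below shows, in Python, how a v1.6 service carrying io.rancher.scheduler.global: 'true' could be expressed as a Kubernetes DaemonSet instead of a Deployment, with an optional nodeSelector that limits it to labeled nodes. The function to_workload is hypothetical and is not part of migration-tools; the names, image, and labels are illustrative.

```python
import json
from typing import Optional


def to_workload(name: str, image: str, v16_labels: dict, node_selector: Optional[dict] = None) -> dict:
    """Hypothetical converter: global v1.6 services become DaemonSets, others Deployments."""
    is_global = v16_labels.get("io.rancher.scheduler.global") == "true"
    pod_spec = {"containers": [{"name": name, "image": image}]}
    if node_selector:
        pod_spec["nodeSelector"] = node_selector  # restrict pods to nodes with these labels
    spec = {
        "selector": {"matchLabels": {"app": name}},
        "template": {"metadata": {"labels": {"app": name}}, "spec": pod_spec},
    }
    if not is_global:
        # Placeholder scale; DaemonSets have no replicas field (one pod per eligible node).
        spec["replicas"] = 1
    return {
        "apiVersion": "apps/v1",
        "kind": "DaemonSet" if is_global else "Deployment",
        "metadata": {"name": name},
        "spec": spec,
    }


agent = to_workload("log-agent", "fluent/fluent-bit", {"io.rancher.scheduler.global": "true"},
                    node_selector={"logging": "enabled"})
print(json.dumps(agent, indent=2))
```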

Scheduling Pods Using Resource Constraints

While creating a service in the Rancher v1.6 UI, you could schedule its containers to hosts based on hardware requirements that you choose. The containers are then scheduled to hosts based on which ones have bandwidth, memory, and CPU capacity.

In Rancher v2.x, you can still specify the resources required by your pods. However, these options are unavailable in the UI. Instead, you must edit your workload's manifest file to declare these resource constraints.

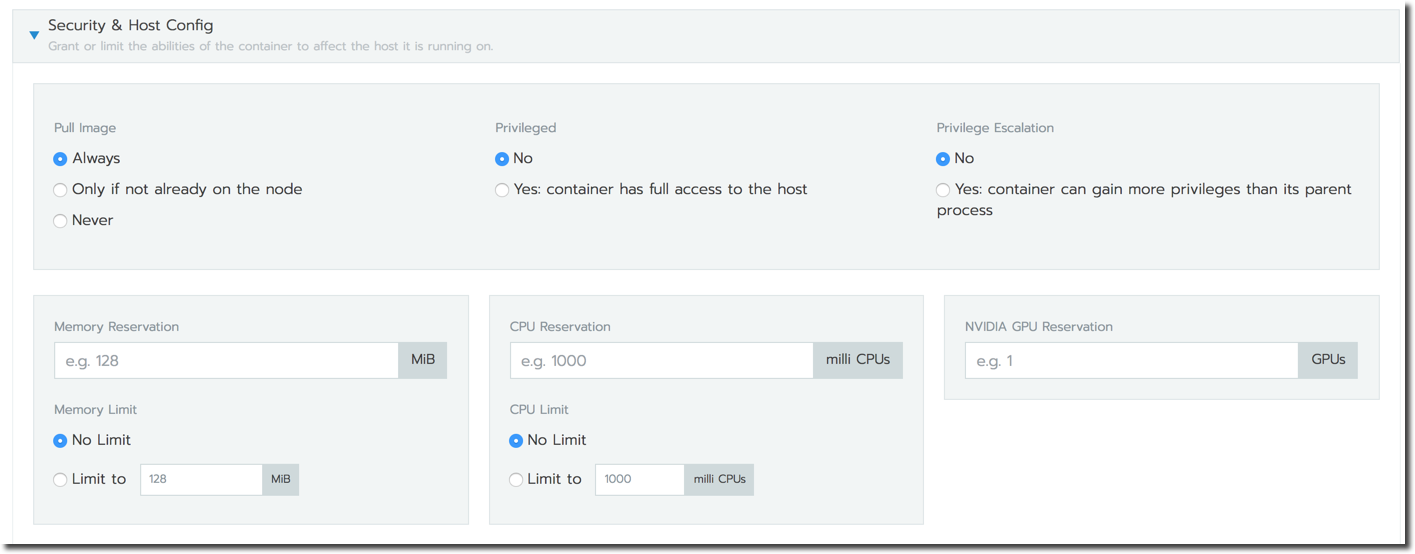

To declare resource constraints, edit your migrated workloads and set the values in the Security & Host section.

To reserve a minimum amount of hardware for your pod(s), edit the following fields:

- Memory Reservation

- CPU Reservation

- NVIDIA GPU Reservation

To set a maximum hardware limit for your pods, edit:

- Memory Limit

- CPU Limit
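In the manifest, reservations and limits become a container's resources.requests and resources.limits. The Python sketch below assumes the Rancher fields map as follows: Memory Reservation and CPU Reservation to requests, Memory Limit and CPU Limit to limits, and NVIDIA GPU Reservation to the nvidia.com/gpu extended resource, which Kubernetes expects under limits (if a GPU request is also given, it must equal the limit). The quantities are arbitrary examples, and the quantity parsers handle only the simple forms shown (millicores, decimal cores, Ki/Mi/Gi, plain bytes). The helper also checks a rule Kubernetes enforces: a request may not exceed its limit.

```python
import json

BINARY_SUFFIXES = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30}


def cpu_millicores(q: str) -> int:
    """Parse CPU quantities such as '250m', '0.5', or '2' into millicores."""
    return int(q[:-1]) if q.endswith("m") else round(float(q) * 1000)


def mem_bytes(q: str) -> int:
    """Parse memory quantities with binary suffixes (Ki, Mi, Gi) or plain bytes."""
    for suffix, factor in BINARY_SUFFIXES.items():
        if q.endswith(suffix):
            return int(q[: -len(suffix)]) * factor
    return int(q)


def container_resources(cpu_req: str, mem_req: str, cpu_lim: str, mem_lim: str, gpus: int = 0) -> dict:
    """Build a container `resources` block, rejecting requests that exceed limits."""
    if cpu_millicores(cpu_req) > cpu_millicores(cpu_lim):
        raise ValueError("CPU reservation exceeds CPU limit")
    if mem_bytes(mem_req) > mem_bytes(mem_lim):
        raise ValueError("memory reservation exceeds memory limit")
    resources = {
        "requests": {"cpu": cpu_req, "memory": mem_req},  # minimum the scheduler must find free
        "limits": {"cpu": cpu_lim, "memory": mem_lim},    # ceiling enforced at runtime
    }
    if gpus:
        # Extended resource: with only a limit set, Kubernetes uses it as the request too.
        resources["limits"]["nvidia.com/gpu"] = gpus
    return resources


res = container_resources("250m", "128Mi", "1", "512Mi", gpus=1)
print(json.dumps(res, indent=2))
```

The returned dictionary would sit under spec.template.spec.containers[].resources of the workload.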

You can find more detail about these specs and how to use them in the Kubernetes Documentation.