Container Network Interface (CNI) Providers

What is CNI?

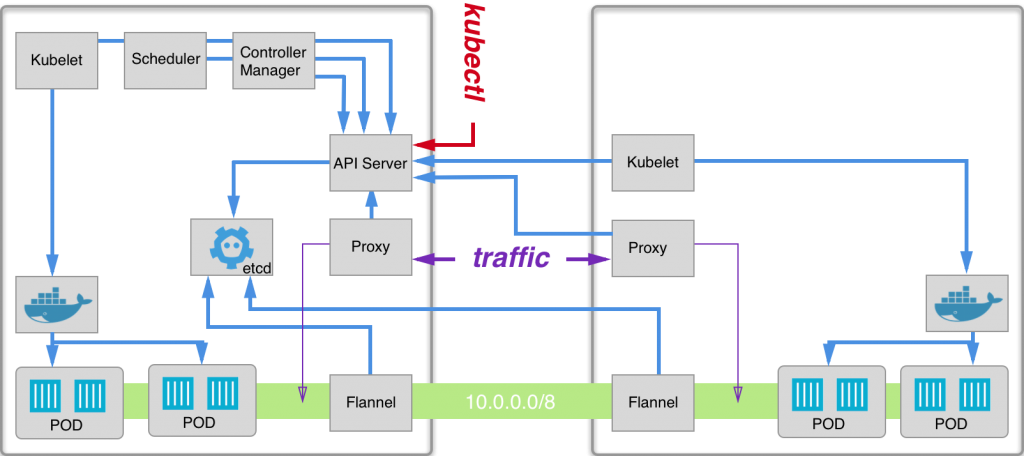

CNI (Container Network Interface), a Cloud Native Computing Foundation project, consists of a specification and libraries for writing plugins to configure network interfaces in Linux containers, along with a number of plugins. CNI concerns itself only with network connectivity of containers and removing allocated resources when the container is deleted.

Kubernetes uses CNI as an interface between network providers and Kubernetes pod networking.

For more information, visit the CNI GitHub project.

What Network Models are Used in CNI?

CNI network providers implement their network fabric using either an encapsulated network model, such as Virtual Extensible LAN (VXLAN), or an unencapsulated network model that relies on a routing protocol such as Border Gateway Protocol (BGP).

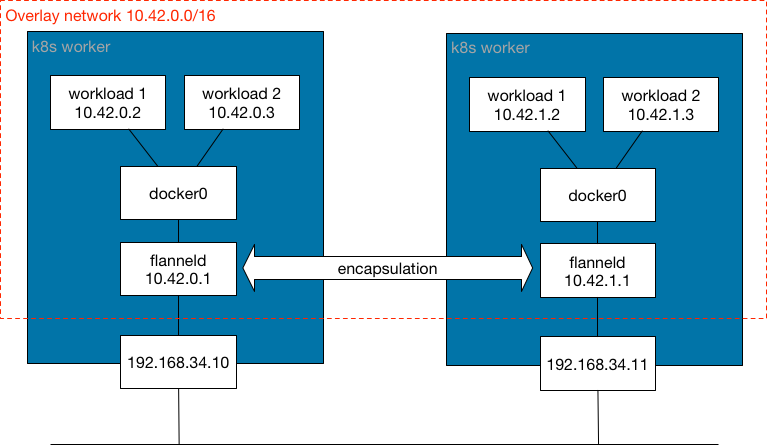

What is an Encapsulated Network?

This network model provides a logical Layer 2 (L2) network encapsulated over the existing Layer 3 (L3) network topology that spans the Kubernetes cluster nodes. With this model you have an isolated L2 network for containers without needing route distribution. The cost is minimal processing overhead and a larger packet size, because overlay encapsulation adds extra headers, including an outer IP header, to every packet. Encapsulation information is exchanged over UDP ports between Kubernetes workers, which share network control plane information about how MAC addresses can be reached. Common encapsulation protocols used in this network model are VXLAN, Internet Protocol Security (IPSec), and IP-in-IP.
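As a rough worked example of that packet-size overhead, assume IPv4 VXLAN encapsulation on an underlay network with a standard 1500-byte MTU (both are assumptions; your network may differ). Each encapsulated packet carries an outer IPv4 header, a UDP header, a VXLAN header, and the inner Ethernet header, so the MTU left for pod traffic is:

$$
\text{MTU}_{\text{pod}} = 1500 - (\underbrace{20}_{\text{outer IPv4}} + \underbrace{8}_{\text{UDP}} + \underbrace{8}_{\text{VXLAN}} + \underbrace{14}_{\text{inner Ethernet}}) = 1500 - 50 = 1450 \text{ bytes}
$$

This is why VXLAN-based providers commonly use a 1450-byte pod MTU on 1500-byte networks. IP-in-IP adds only the 20-byte outer IPv4 header, which leaves 1480 bytes.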

In simple terms, this network model creates a kind of network bridge that extends across Kubernetes workers, to which pods are connected.

This network model is used when an extended L2 bridge is preferred. It is sensitive to L3 network latency between Kubernetes workers. If datacenters are in distinct geolocations, ensure low latency between them to avoid network segmentation.

CNI network providers using this network model include Flannel, Canal, Weave, and Cilium. By default, Calico does not use this model, but it can be configured to do so.
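As a minimal sketch of that configuration, the following Calico `IPPool` enables VXLAN encapsulation for a pod address range. The pool name and CIDR are hypothetical placeholders, and how you apply the resource (for example with `calicoctl apply -f`, or through the operator's `Installation` resource on operator-managed installs) depends on how Calico was deployed in your cluster.

```yaml
# Hypothetical pool name and CIDR; adjust to your cluster's pod network.
apiVersion: projectcalico.org/v3
kind: IPPool
metadata:
  name: example-vxlan-pool
spec:
  cidr: 10.42.0.0/16
  # Encapsulate all pod-to-pod traffic between nodes in VXLAN
  vxlanMode: Always
  # Do not also use IP-in-IP for this pool
  ipipMode: Never
  # Masquerade (SNAT) traffic from these pods to destinations outside Calico IP pools
  natOutgoing: true
```

Setting `vxlanMode: CrossSubnet` instead encapsulates only traffic that crosses a subnet boundary and routes same-subnet traffic without encapsulation.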

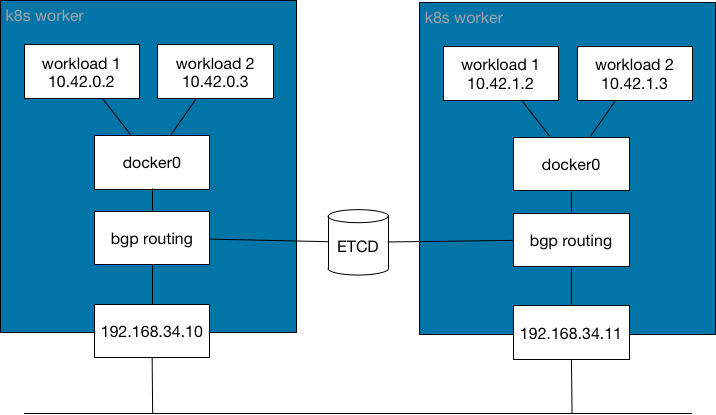

What is an Unencapsulated Network?

This network model provides an L3 network to route packets between containers. It doesn't create an isolated L2 network and doesn't add encapsulation overhead. These benefits come at the cost of Kubernetes workers having to manage any route distribution that's needed. Instead of adding IP headers for encapsulation, this network model uses a routing protocol such as BGP between Kubernetes workers to distribute the routes needed to reach pods.

In simple terms, this network model creates a kind of network router that extends across Kubernetes workers and provides information about how to reach pods.

This network model is used when a routed L3 network is preferred. This model dynamically updates routes at the OS level on Kubernetes workers. It's less sensitive to latency.
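For example, a Calico setup using this model could look like the following sketch: an `IPPool` with both encapsulation modes disabled, plus a `BGPConfiguration` that keeps Calico's full node-to-node BGP mesh. The pool name, CIDR, and AS number are hypothetical placeholders, and unencapsulated routing assumes the underlying network can forward pod IP addresses between nodes (for example, nodes sharing an L2 segment, or a network fabric that learns the pod routes).

```yaml
# Hypothetical values throughout; adjust to your environment.
apiVersion: projectcalico.org/v3
kind: IPPool
metadata:
  name: example-routed-pool
spec:
  cidr: 10.42.0.0/16
  # No encapsulation: pod packets are routed natively between nodes
  vxlanMode: Never
  ipipMode: Never
  natOutgoing: true
---
apiVersion: projectcalico.org/v3
kind: BGPConfiguration
metadata:
  # Calico reads cluster-wide BGP settings from the resource named "default"
  name: default
spec:
  # Every node peers with every other node to exchange pod routes
  nodeToNodeMeshEnabled: true
  # Private AS number used by the cluster's nodes (64512 is also Calico's default)
  asNumber: 64512
```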

CNI network providers using this network model include Calico and Cilium. Cilium may be configured with this model, although it is not the default mode.
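If you want to try Cilium's unencapsulated mode, a minimal sketch of the relevant Helm values follows. It assumes Cilium 1.14 or later, where the `routingMode` value replaced the older `tunnel` value, and the CIDR is a hypothetical placeholder for the range that Cilium should route natively rather than masquerade.

```yaml
# Cilium Helm values (assumes Cilium 1.14+ value names)
# Route pod traffic natively instead of through a VXLAN/Geneve tunnel
routingMode: native
# Hypothetical CIDR: traffic to this range is handed to the Linux stack without SNAT
ipv4NativeRoutingCIDR: 10.42.0.0/16
# Install routes to other nodes' pod CIDRs directly (requires nodes on a shared L2 segment)
autoDirectNodeRoutes: true
```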

What CNI Providers are Provided by Rancher?

RKE Kubernetes clusters

Out of the box, Rancher provides the following CNI network providers for RKE Kubernetes clusters: Canal, Flannel, Calico, and Weave.

You can choose your CNI network provider when you create new Kubernetes clusters from Rancher.
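If you provision clusters with an RKE `cluster.yml` instead of the Rancher UI, the provider is selected with the `network.plugin` option. A minimal sketch follows; in Rancher's own cluster config file, the same `network` block is nested under `rancher_kubernetes_engine_config`.

```yaml
# RKE cluster.yml fragment: choose the CNI network provider
network:
  # Supported values include canal (the default), flannel, calico, and weave
  plugin: canal
```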

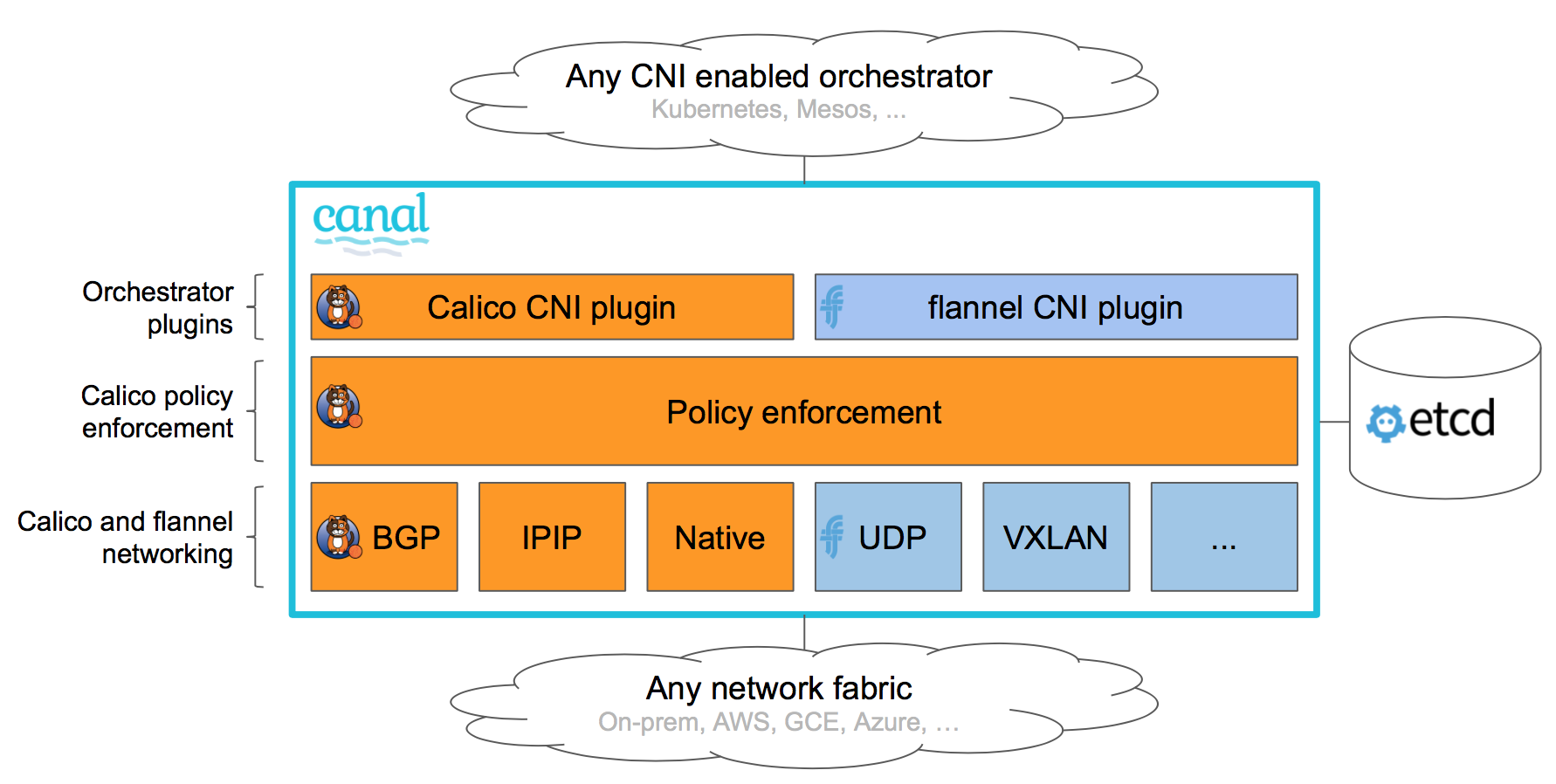

Canal

Canal is a CNI network provider that gives you the best of Flannel and Calico. It allows users to easily deploy Calico and Flannel networking together as a unified networking solution, combining Calico’s network policy enforcement with the rich superset of Calico (unencapsulated) and/or Flannel (encapsulated) network connectivity options.

In Rancher, Canal is the default CNI network provider, configured with Flannel and VXLAN encapsulation.

Kubernetes workers should open UDP port 8472 (VXLAN) and TCP port 9099 (health checks). If using WireGuard, you should open UDP ports 51820 and 51821. For more details, refer to the port requirements for user clusters.
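On RKE2 clusters, one way to switch Canal's Flannel backend to WireGuard is a `HelmChartConfig` for the bundled `rke2-canal` chart, as sketched below. This assumes an RKE2 release whose Canal chart supports the WireGuard backend (RKE2 v1.23 and later) and a standalone RKE2 install; for Rancher-provisioned clusters, chart values are edited through the cluster configuration instead. The UDP 51820/51821 port requirement above applies once this backend is enabled.

```yaml
# Place in /var/lib/rancher/rke2/server/manifests/ on a server node (standalone RKE2)
apiVersion: helm.cattle.io/v1
kind: HelmChartConfig
metadata:
  name: rke2-canal
  namespace: kube-system
spec:
  valuesContent: |-
    flannel:
      # Replace the default VXLAN backend with in-kernel WireGuard encryption
      backend: "wireguard"
```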

For more information, refer to the Rancher maintained Canal source and the Canal GitHub Page.

Flannel

Flannel is a simple and easy way to configure an L3 network fabric designed for Kubernetes. Flannel runs a single binary agent named flanneld on each host, which is responsible for allocating a subnet lease to each host out of a larger, preconfigured address space. Flannel uses either the Kubernetes API or etcd directly to store the network configuration, the allocated subnets, and any auxiliary data (such as the host's public IP). Packets are forwarded using one of several backend mechanisms, with the default encapsulation being VXLAN.
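The subnet allocation and backend described above come from Flannel's network configuration. The sketch below shows that configuration as it appears in the upstream `kube-flannel.yml` manifest; the namespace and CIDR follow upstream defaults and are assumptions here, since RKE deploys Flannel with its own templates.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: kube-flannel-cfg
  namespace: kube-flannel
data:
  # "Network" is the cluster-wide address space; each host leases a /24 ("SubnetLen") from it.
  # "Backend.Type" selects the forwarding mechanism (vxlan is the default).
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "SubnetLen": 24,
      "Backend": {
        "Type": "vxlan"
      }
    }
```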

Encapsulated traffic is unencrypted by default. Flannel provides two solutions for encryption:

- IPSec, which makes use of strongSwan to establish encrypted IPSec tunnels between Kubernetes workers. It is an experimental backend for encryption.

- WireGuard, which is a faster-performing alternative to strongSwan.

Kubernetes workers should open UDP port 8472 (VXLAN). See the port requirements for user clusters for more details.

For more information, see the Flannel GitHub Page.

Weave

The Weave CNI plugin for RKE with Kubernetes v1.27 and later is now deprecated. Weave will be removed in RKE with Kubernetes v1.30.

Weave enables networking and network policy in Kubernetes clusters across the cloud. Additionally, it supports encrypting traffic between the peers.

Kubernetes workers should open TCP port 6783 (control port), UDP port 6783 and UDP port 6784 (data ports). See the port requirements for user clusters for more details.
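Weave's traffic encryption is enabled in RKE by setting a shared password. A minimal `cluster.yml` sketch follows; the password is a placeholder, and given the deprecation notice above, this applies only to RKE clusters on Kubernetes versions that still ship Weave.

```yaml
# RKE cluster.yml fragment (Weave is deprecated for Kubernetes v1.27 and later)
network:
  plugin: weave
  weave_network_provider:
    # Placeholder shared secret; all peers use it to encrypt traffic between them
    password: "replace-with-a-strong-password"
```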

For more information, see the following pages:

RKE2 Kubernetes clusters

Out of the box, Rancher provides the following CNI network providers for RKE2 Kubernetes clusters: Canal (see above section), Calico, and Cilium.

You can choose your CNI network provider when you create new Kubernetes clusters from Rancher.
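For standalone RKE2 installs outside the Rancher UI, the provider is chosen with the `cni` option in the RKE2 configuration file. A minimal sketch, assuming the default config file location:

```yaml
# /etc/rancher/rke2/config.yaml on each server node
# Valid values include canal (the default), calico, and cilium
cni: calico
```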

Calico

Calico enables networking and network policy in Kubernetes clusters across the cloud. By default, Calico uses a pure, unencapsulated IP network fabric and policy engine to provide networking for your Kubernetes workloads. Workloads are able to communicate over both cloud infrastructure and on-prem using BGP.

Calico also provides a stateless IP-in-IP or VXLAN encapsulation mode that can be used if necessary. In addition, Calico offers policy isolation, allowing you to secure and govern your Kubernetes workloads using advanced ingress and egress policies.
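As an illustration of those egress policies, the following sketch uses Calico's `GlobalNetworkPolicy` resource, which applies across all namespaces, to block workloads from reaching a cloud instance metadata address while allowing all other egress. The policy name and the choice of `169.254.169.254` are illustrative assumptions, and `projectcalico.org/v3` resources are applied with `calicoctl` or through the Calico API server.

```yaml
apiVersion: projectcalico.org/v3
kind: GlobalNetworkPolicy
metadata:
  name: example-deny-metadata-egress
spec:
  # all() matches every endpoint that Calico manages
  selector: all()
  types:
    - Egress
  egress:
    # Rules are evaluated in order: first deny the metadata address...
    - action: Deny
      destination:
        nets:
          - 169.254.169.254/32
    # ...then allow any other egress traffic
    - action: Allow
```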

Kubernetes workers should open TCP port 179 if using BGP or UDP port 4789 if using VXLAN encapsulation. In addition, TCP port 5473 is needed when using Typha. See the port requirements for user clusters for more details.

In Rancher v2.6.3, Calico probes fail on Windows nodes upon RKE2 installation. Note that this issue is resolved in v2.6.4.

To work around this issue, first navigate to `https://<rancherserverurl>/v3/settings/windows-rke2-install-script`. There, change the current setting, `https://raw.githubusercontent.com/rancher/wins/v0.1.3/install.ps1`, to this new setting: `https://raw.githubusercontent.com/rancher/rke2/master/windows/rke2-install.ps1`.

For more information, see the following pages:

Cilium

Cilium enables networking and network policies (L3, L4, and L7) in Kubernetes. By default, Cilium uses eBPF technologies to route packets inside the node and VXLAN to send packets to other nodes. Unencapsulated techniques can also be configured.

Cilium recommends kernel versions greater than 5.2 to leverage the full potential of eBPF. Kubernetes workers should open UDP port 8472 for VXLAN and TCP port 4240 for health checks. In addition, ICMP 8/0 (echo request) must be enabled for health checks. For more information, check the Cilium System Requirements.
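On RKE2, Cilium's Helm values can be overridden with a `HelmChartConfig` for the bundled `rke2-cilium` chart. The sketch below simply spells out the default tunnel mode described above; it assumes a standalone RKE2 release that ships Cilium 1.14 or later (older Cilium releases used a `tunnel: vxlan` value instead).

```yaml
# Place in /var/lib/rancher/rke2/server/manifests/ on a server node (standalone RKE2)
apiVersion: helm.cattle.io/v1
kind: HelmChartConfig
metadata:
  name: rke2-cilium
  namespace: kube-system
spec:
  valuesContent: |-
    # Encapsulate inter-node pod traffic (the default)...
    routingMode: tunnel
    # ...using VXLAN as the tunnel protocol
    tunnelProtocol: vxlan
```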

Ingress Routing Across Nodes in Cilium

By default, Cilium does not allow pods to contact pods on other nodes. To work around this, enable the ingress controller to route requests across nodes with a `CiliumNetworkPolicy`.

After selecting the Cilium CNI and enabling Project Network Isolation for your new cluster, configure as follows:

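```yaml
apiVersion: cilium.io/v2
kind: CiliumNetworkPolicy
metadata:
  name: hn-nodes
  namespace: default
spec:
  # An empty selector applies the policy to every endpoint (pod) in the namespace
  endpointSelector: {}
  ingress:
    # Allow traffic that originates from cluster nodes other than the local node
    - fromEntities:
        - remote-node
```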

CNI Features by Provider

The following table summarizes the different features available for each CNI network provider provided by Rancher.

| Provider | Network Model | Route Distribution | Network Policies | Mesh | External Datastore | Encryption | Ingress/Egress Policies |
|---|---|---|---|---|---|---|---|
| Canal | Encapsulated (VXLAN) | No | Yes | No | K8s API | Yes | Yes |
| Flannel | Encapsulated (VXLAN) | No | No | No | K8s API | Yes | No |
| Calico | Encapsulated (VXLAN, IP-in-IP) or unencapsulated | Yes | Yes | Yes | etcd and K8s API | Yes | Yes |
| Weave | Encapsulated | Yes | Yes | Yes | No | Yes | Yes |
| Cilium | Encapsulated (VXLAN) | Yes | Yes | Yes | etcd and K8s API | Yes | Yes |

Network Model: Encapsulated or unencapsulated. For more information, see What Network Models are Used in CNI?

Route Distribution: The ability to share routes to pods between nodes. This is typically done with BGP, an exterior gateway protocol designed to exchange routing and reachability information on the Internet. BGP can assist with pod-to-pod networking between clusters. This feature is a must for unencapsulated CNI network providers. If you plan to build clusters split across network segments, route distribution is a nice-to-have feature.
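As a sketch of route distribution beyond the cluster, Calico can peer its nodes with an external BGP router, such as a top-of-rack switch, using a `BGPPeer` resource. The peer address (taken from the 192.0.2.0/24 documentation range) and AS number below are hypothetical placeholders.

```yaml
apiVersion: projectcalico.org/v3
kind: BGPPeer
metadata:
  name: example-tor-router
spec:
  # Hypothetical address of the external BGP router
  peerIP: 192.0.2.1
  # AS number configured on that router (hypothetical)
  asNumber: 64513
```

Because this resource sets neither `node` nor `nodeSelector`, the peering is global: every Calico node peers with the router and advertises its pod routes.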

Network Policies: Kubernetes offers functionality to enforce rules about which services can communicate with each other using network policies. This feature is stable as of Kubernetes v1.7 and is ready to use with certain networking plugins.
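A minimal example of such a policy uses the standard Kubernetes `NetworkPolicy` API. The sketch below, with a hypothetical `my-app` namespace, allows pods in that namespace to receive traffic only from other pods in the same namespace. It takes effect only with a provider that enforces network policies, such as Canal, Calico, or Cilium; with Flannel alone, the policy is accepted but not enforced.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-same-namespace
  namespace: my-app   # hypothetical namespace
spec:
  # An empty podSelector selects every pod in the namespace
  podSelector: {}
  policyTypes:
    - Ingress
  ingress:
    # Allow ingress only from pods in this same namespace
    - from:
        - podSelector: {}
```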

Mesh: This feature allows service-to-service networking communication between distinct Kubernetes clusters.

External Datastore: CNI network providers with this feature need an external datastore for their data.

Encryption: This feature allows encrypted and secure network control and data planes.

Ingress/Egress Policies: This feature allows you to manage routing control for both Kubernetes and non-Kubernetes communications.

CNI Community Popularity

The following table summarizes different GitHub metrics to give you an idea of each project's popularity and activity levels. This data was collected in December 2025.

| Provider | Project | Stars | Forks | Contributors |
|---|---|---|---|---|
| Canal | https://github.com/projectcalico/canal | 720 | 97 | 20 |
| Flannel | https://github.com/flannel-io/flannel | 9.4k | 2.9k | 243 |
| Calico | https://github.com/projectcalico/calico | 6.9k | 1.5k | 400 |
| Weave | https://github.com/weaveworks/weave | 6.6k | 677 | 83 |
| Cilium | https://github.com/cilium/cilium | 23.5k | 3.6k | 1022 |

Which CNI Provider Should I Use?

It depends on your project needs. There are many different providers, which each have various features and options. There isn't one provider that meets everyone's needs.

Canal is the default CNI network provider. We recommend it for most use cases. It provides encapsulated networking for containers with Flannel, while adding Calico network policies that can provide project/namespace isolation in terms of networking.

How Can I Configure a CNI Network Provider?

See Cluster Options for how to configure a network provider for your cluster. For more advanced configuration options, see how to configure your cluster using a Config File and the options for Network Plug-ins.